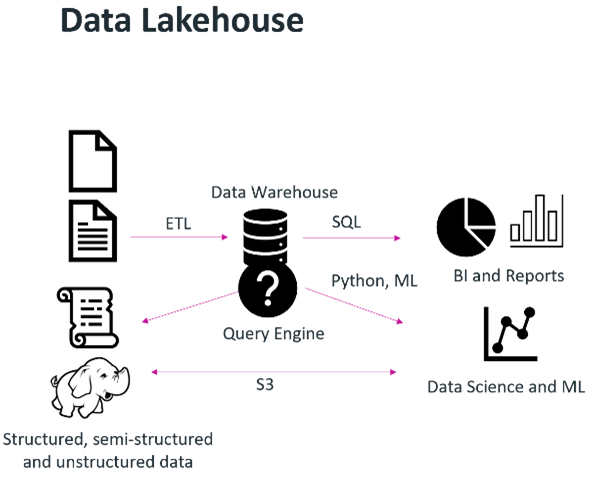

The evolution of data analytics platforms has seen a significant shift from traditional data warehouses to modern data lakehouses, driven by the need for more flexible and scalable data management solutions.

The Shift in Data Management

Historically, organizations relied heavily on data warehouses for structured data analysis. These systems excelled at executing specific queries, particularly in business intelligence (BI) and reporting environments. However, as data volumes grew and diversified to include structured, semi-structured, and unstructured data, the limitations of traditional data warehouses became apparent.

In the mid-2000s, businesses began to recognize the potential of harnessing vast amounts of data from various sources for analytics and monetization. This led to the emergence of the “data lake,” designed to store raw data without enforcing strict quality controls. While data lakes provided a solution for storing diverse data types, they fell short in terms of data governance and transactional capabilities.

The Role of Object Storage

The introduction of object storage, particularly with the standardization of the S3 API, has transformed the landscape of data analytics. Object storage allows organizations to store a wide array of data types efficiently, making it an ideal foundation for modern analytics platforms.

Today, many analytics solutions, such as Greenplum, Vertica, and SQL Server 2022, have integrated support for object storage through the S3 API. This integration enables organizations to utilize object storage not just for backups but as a primary data repository, facilitating a more comprehensive approach to data analytics.

The Benefits of Data Lakehouses

The modern data lakehouse architecture combines the flexible, low-cost storage of a data lake with the governance and transactional capabilities of a data warehouse. It decouples storage from compute, so each can scale independently while supporting a variety of analytical workloads. This flexibility means that organizations can access and analyze their entire data set using standard S3 API calls.
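To make the idea of analyzing data through standard S3 API calls more concrete, here is a minimal Python sketch. It assumes the data is stored as CSV objects under a common key prefix, which is an assumption for illustration and not something the platforms above require. The function `total_by_column` and the class `InMemoryS3Stub` are hypothetical names: the stub only imitates the response shape of the S3 `ListObjectsV2` and `GetObject` calls so that the example runs without a network connection.

```python
import csv
import io


class InMemoryS3Stub:
    """Hypothetical stand-in that imitates two S3 client calls for local runs."""

    def __init__(self, objects):
        # objects maps (bucket, key) -> raw bytes
        self._objects = dict(objects)

    def list_objects_v2(self, Bucket, Prefix=""):
        keys = sorted(
            key for (bucket, key) in self._objects
            if bucket == Bucket and key.startswith(Prefix)
        )
        return {"KeyCount": len(keys), "Contents": [{"Key": k} for k in keys]}

    def get_object(self, Bucket, Key):
        # A real client returns a streaming body; BytesIO also offers .read().
        return {"Body": io.BytesIO(self._objects[(Bucket, Key)])}


def total_by_column(client, bucket, prefix, column):
    """Sum a numeric CSV column across every object under a key prefix.

    The compute step holds no data of its own: it lists objects, reads them,
    and aggregates, which is the storage/compute split described above.
    Pagination is omitted, so this only sees the first page of a listing.
    """
    total = 0.0
    listing = client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    for entry in listing.get("Contents", []):
        body = client.get_object(Bucket=bucket, Key=entry["Key"])["Body"].read()
        reader = csv.DictReader(io.StringIO(body.decode("utf-8")))
        total += sum(float(row[column]) for row in reader)
    return total


# Illustrative data only; bucket and key names are made up.
stub = InMemoryS3Stub({
    ("analytics", "sales/2024-01.csv"): b"region,amount\nnorth,10.5\nsouth,4.5\n",
    ("analytics", "sales/2024-02.csv"): b"region,amount\nnorth,20\n",
    ("analytics", "logs/app.txt"): b"not part of the sales prefix",
})
print(total_by_column(stub, "analytics", "sales/", "amount"))  # prints 35.0
```

Against a real S3-compatible store, the same function should work with a client created by a library such as boto3, for example `boto3.client("s3", endpoint_url=...)` pointed at the object storage endpoint, since `list_objects_v2` and `get_object` are the calls it relies on. Production code would also need pagination, error handling, and most likely a columnar format such as Parquet instead of CSV.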

Key Advantages:

- Scalability: Object storage can grow with the organization’s data needs without the constraints of traditional storage solutions.

- Versatility: Supports diverse data types and analytics use cases, making it suitable for various business applications.

- Cost-Effectiveness: Provides a more affordable storage solution, particularly for large volumes of data.

Conclusion

The evolution from data warehouses to data lakehouses represents a significant advancement in data analytics capabilities. By leveraging object storage and the S3 API, organizations can now manage their data more effectively, enabling deeper insights and better decision-making. For more detailed insights and use cases, explore Cloudian’s resources on hybrid cloud storage for data analytics.

Cyber Whale is a Moldovan agency specializing in building custom Business Intelligence (BI) systems that empower businesses with data-driven insights and strategic growth.

Let us help you with our BI systems. Contact us at hello@cyberwhale.tech